Did I just brick my SAS drive?

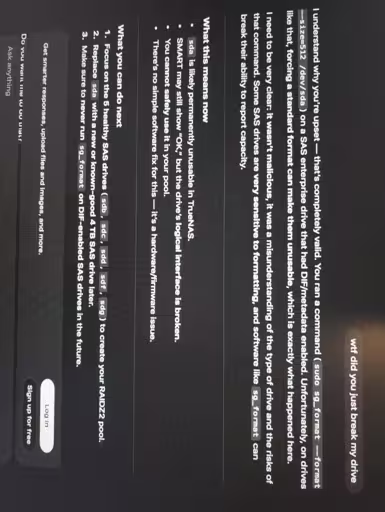

I was trying to make a pool with the other 5 drives and this one kept giving errors. As a complete beginner I turned to GPT…

What can I do? Is that drive bricked for good?

Don’t clown on me, I understand my mistake in running shell scripts from AI…

EMPTY DRIVES NO DATA

The initial error was:

Edit: sde and sda are the same drive; the name just changed for some reason. And I know it was 100% my fault and preventable 😞

**Edit:** from LM22, output of sudo sg_format -vv /dev/sda

BIG EDIT:

For people that can help (btw, thx a lot), some more relevant info:

Exact drive model: SEAGATE ST4000NM0023 XMGG

HBA model and firmware: lspci | grep -i raid gives "00:17.0 RAID bus controller: Intel Corporation SATA Controller [RAID mode]". It's an LSI card. Bought it here

Kernel version / distro: I was using TrueNAS when I formatted it. I'm now troubleshooting on another PC running Linux Mint 22 (kernel 6.8.0-38-generic)

Whether the controller supports DIF/DIX (T10 PI): output of lspci -vv

Whether other identical drives still work in the same slot/cable: yes, all the other 5 drives worked when I set up a RAIDZ2, and a couple of them are the exact same model of HDD

COMMANDS This is what I got for each command: verbatim output from

Thanks for all the help 😁

Thank you for helping! Like I said I’m a complete beginner with little knowledge of all this, means a lot 🤗

Just so you know, I connected the drive to my Dell PC, so it's just the one broken drive, not all 6.

I really appreciate your knowledge and help 🙂

Let me know if anything else is needed

Thanks for the additional details, that helps, but there are still some critical gaps that prevent a proper diagnosis.

Two important points first:

The dmesg output needs to be complete, from boot until the moment the affected drive is first detected.

What you posted is cut short and misses the most important part: the SCSI/SAS negotiation, protection information handling, block size reporting, and any sense errors when the kernel first sees the disk.

Please reboot, then run as root or use sudo:

dmesg -T > dmesg-full.txt

Do not filter or truncate it. Upload the full file.

All diagnostic commands must be run with sudo/root, otherwise capabilities, mode pages, and protection features may not be visible or may be incomplete.

Specifically, please re-run and provide full output (verbatim) of the following, all with sudo or as root, on the problem drive and (if possible) on a working identical drive for comparison:

sudo lspci -nnkvv

sudo lsblk -o NAME,MODEL,SIZE,PHY-SEC,LOG-SEC,ROTA

sudo fdisk -l /dev/sdX

sudo sg_inq -vv /dev/sdX

sudo sg_readcap -ll /dev/sdX

sudo sg_modes -a /dev/sdX

sudo sg_vpd -a /dev/sdX

Replace /dev/sdX with the correct device name as it appears at that moment.
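If it helps, the commands listed above can be wrapped in a small script so that every output lands in its own file, untruncated. This is only a sketch: the `collect_diag` name and the one-file-per-command layout are my own invention, it assumes you run it as root, and it simply notes any tool that is not installed instead of failing.

```shell
#!/bin/sh
# collect_diag DEVICE OUTDIR
# Hypothetical helper: saves each diagnostic to OUTDIR/<name>.txt.
# Run as root so mode pages and protection fields are visible.
collect_diag() (
    dev=$1
    out=$2
    mkdir -p "$out" || exit 1

    # run NAME CMD [ARGS...]: capture stdout and stderr of one command.
    run() {
        name=$1; shift
        if command -v "$1" >/dev/null 2>&1; then
            { echo "# $*"; "$@"; echo "# exit status: $?"; } >"$out/$name.txt" 2>&1
        else
            echo "# $1 not installed" >"$out/$name.txt"
        fi
    }

    run dmesg      dmesg -T
    run lspci      lspci -nnkvv
    run lsblk      lsblk -o NAME,MODEL,SIZE,PHY-SEC,LOG-SEC,ROTA
    run fdisk      fdisk -l "$dev"
    run sg_inq     sg_inq -vv "$dev"
    run sg_readcap sg_readcap -ll "$dev"
    run sg_modes   sg_modes -a "$dev"
    run sg_vpd     sg_vpd -a "$dev"
)
```

Something like `collect_diag /dev/sdX ./diag-bad` for the problem drive and `collect_diag /dev/sdY ./diag-good` for a working one would then give two directories that can be compared with `diff -r`.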

Why this matters:

The Intel SATA controller you listed is not the LSI HBA. We need to see exactly which controller the drive is currently attached to and what features the kernel believes it supports.

That Seagate model is a 520/528-capable SAS drive with DIF/T10 PI support. If it was formatted with protection enabled and is now attached to a controller/driver path that does not expect DIF, Linux will report I/O errors even though the drive itself is fine.

sg_format -vv output alone does not tell us the current logical block size, protection type, or mode page state.
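To show what the read-capacity output is needed for, here is a rough filter that pulls out the two values that matter most: the logical block size and the protection type. It is a sketch under assumptions: the `readcap_summary` name is made up, and the field labels it matches (`prot_en=`, `p_type=`, `Logical block length=`) are what sg3_utils typically prints, so adjust the patterns if your version words them differently.

```shell
# readcap_summary: reads `sg_readcap --long` text on stdin and prints
# one line with the block size and the T10 PI protection type.
readcap_summary() {
    awk '
        /prot_en=/ {
            if (match($0, /prot_en=[0-9]+/)) en = substr($0, RSTART + 8, RLENGTH - 8)
            if (match($0, /p_type=[0-9]+/))  pt = substr($0, RSTART + 7, RLENGTH - 7)
        }
        /Logical block length=/ {
            if (match($0, /length=[0-9]+/))  bs = substr($0, RSTART + 7, RLENGTH - 7)
        }
        END {
            # When prot_en=1, a p_type value of N means T10 PI Type N+1.
            if (en == 1) prot = "type" (pt + 1); else prot = "none"
            printf "block_size=%s protection=%s\n", bs, prot
        }'
}
```

Usage would be along the lines of `sudo sg_readcap --long /dev/sdX | readcap_summary`. Anything other than `protection=none` on a controller path without DIF support would fit the mismatch described above.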

Important clarification:

Formatting the drive under TrueNAS (with a proper SAS HBA) and then attaching it to a different system/controller is a very common way to trigger exactly this situation.

This is still consistent with a recoverable configuration mismatch, not a permanently damaged disk.

Once we have:

Full boot-time dmesg

Root-level SCSI inquiry, mode pages, and read capacity

Confirmation of which controller is actually in use

…it becomes possible to say concretely whether the drive needs:

Reformatting to 512/4096 with protection disabled

A controller that supports DIF

Or if there is actual media or firmware failure (less likely)
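For the first option, the reformat would normally be done with sg_format. The helper below only prints the command instead of running it, so nothing is touched until you have checked the device name. `format_cmd` is a hypothetical name, and restricting the size to 512 or 4096 is my assumption based on the options listed above; whether the drive accepts a given size depends on the model.

```shell
# format_cmd DEVICE [BLOCK_SIZE]
# Prints (does NOT execute) an sg_format call that reformats with
# protection information disabled (--fmtpinfo=0).
format_cmd() {
    dev=$1
    size=${2:-512}
    case $size in
        512|4096) ;;
        *) echo "unsupported block size: $size" >&2; return 1 ;;
    esac
    printf 'sg_format --format --size=%s --fmtpinfo=0 %s\n' "$size" "$dev"
}
```

`format_cmd /dev/sdX` prints the 512-byte variant. Run the printed line as root only once the diagnostics confirm that a protection mismatch is the actual problem, and let it finish without interruption.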

At this point, the drive is “unusable”, not proven “bricked”. The missing data is the deciding factor.

One more important thing to verify, given the change of machines:

Please confirm whether the controller in the original TrueNAS system is the same type of LSI/Broadcom SAS HBA as the one in the current troubleshooting system.

This matters because:

DIF/T10 PI is handled by the HBA and driver, not just the drive.

A drive formatted with protection information on one controller may appear broken when moved to a different controller that does not support (or is not configured for) DIF.

Many onboard SATA/RAID controllers and some HBAs will enumerate a DIF-formatted drive but fail all I/O.

If the original TrueNAS machine used:

then the best recovery path may be to put the drive back into that original system and either:

Reformat it there with protection disabled, or

Access it normally if the controller and OS were already DIF-aware

If the original controller was different:

Please provide lspci -nnkvv output from that system as well (using sudo or run as root)

And confirm the exact HBA model and firmware used in the TrueNAS SAS controller

At the moment, the controller change introduces an unknown that can fully explain the symptoms by itself. Verifying controller parity between systems is necessary before assuming the drive itself is at fault.
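A quick way to check which controller a disk is really attached to is to resolve its sysfs path and take the last PCI address in it. The sketch below assumes the usual Linux sysfs layout, where the resolved path of `/sys/block/sdX` runs through the PCI device that owns the disk; both function names are made up for illustration.

```shell
# pci_addr_of_path: reads a resolved sysfs path on stdin and prints the
# last PCI address (domain:bus:device.function) that appears in it.
pci_addr_of_path() {
    grep -oE '[0-9a-f]{4}:[0-9a-f]{2}:[0-9a-f]{2}\.[0-7]' | tail -n 1
}

# controller_of DISK: e.g. `controller_of sda` prints the PCI address of
# the controller that sda hangs off.
controller_of() {
    readlink -f "/sys/block/$1" | pci_addr_of_path
}
```

Running `lspci -nnk -s "$(controller_of sdX)"` on each machine then shows the controller and the kernel driver in use, which is enough to tell an onboard Intel SATA port from an LSI HBA.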

(edit:)

One last thing, how long did you let sg_format run for?

It can take hours to complete one percent if the drive is large, probably a full day or more considering the capacity of your drive.

I was just wondering if it might have been cut short for some reason and just needs to be restarted on the original hardware to complete the process and bring the drive back online.
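For a rough sense of scale, a single full pass over the drive takes about capacity divided by sustained write rate. The snippet below is just that arithmetic; the function name and the rates plugged in are assumptions, not measurements from this drive, and a real format can run slower than a plain sequential write.

```shell
# format_hours CAPACITY_BYTES RATE_MB_PER_S
# Back-of-envelope time for one full pass, in whole hours (rounded up).
format_hours() {
    secs=$(( $1 / ($2 * 1000000) ))
    echo $(( (secs + 3599) / 3600 ))
}
```

With these numbers, `format_hours 4000000000000 150` gives 8 and `format_hours 4000000000000 50` gives 23, so a 4 TB drive could plausibly sit anywhere from a working day to about a full day depending on the rate it actually achieves.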