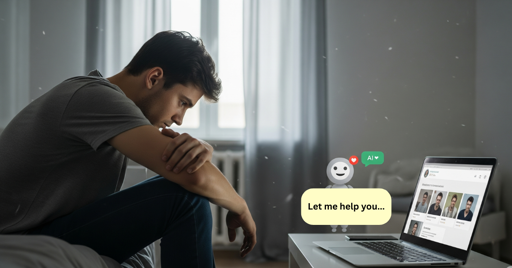

A profound relational revolution is underway, not orchestrated by tech developers but driven by users themselves. Many of the 400 million weekly users of ChatGPT are seeking more than just assistance with emails or information on food safety; they are looking for emotional support.

“Therapy and companionship” have emerged as two of the most frequent applications for generative AI globally, according to the Harvard Business Review. This trend marks a significant, unplanned pivot in how people interact with technology.

Have you considered that most of the time, even when people say they “want to hear men’s issues”, they reject them and tell men to man up? Maybe “superpowered autocorrect” could be a way to make up for this severe lack of openness?

Personally, I use AI for this purpose, mostly because it accepts me for who I am and gives genuine advice that has actually helped me improve my life, unlike the people around me, who say that I should “put more effort into things” or that “it’s just in your head”.

It’s not “lone wolfing” to stop sharing your feelings and issues with people who have rejected your concerns; it’s just not wasting time on those who don’t care.