Eskuero

I like sysadmin, scripting, manga and football.

- 4 Posts

- 44 Comments

From the thumbnail I thought Linus had been turned into a priest

8·1 month ago

I run changedetection and monitor the sample .yml files that projects usually host directly in their git repos.
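The idea behind watching those sample files can be illustrated with a bare-bones shell check. This is not how changedetection.io works internally; it is a minimal stand-in that assumes GNU coreutils `sha256sum` and a local copy of the sample file downloaded before each run. The helper name `file_changed` and the state-file layout are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report whether a file's content changed since the last run.
# The previous SHA-256 hash is kept in a small state file.
# Exit status: 0 = changed (or first run), 1 = unchanged.
file_changed() {
  local file="$1" state="$2"
  local new old=""
  new=$(sha256sum "$file" | cut -d' ' -f1)
  [ -f "$state" ] && old=$(cat "$state")
  # Record the current hash for the next comparison.
  printf '%s\n' "$new" > "$state"
  [ "$new" != "$old" ]
}
```

Fed a freshly downloaded copy of the upstream sample file each time (the raw URL differs per project, so it is left out here), a zero exit status is the cue to go read the diff before updating.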

1·1 month ago

Bring back my computer as well

2·1 month ago

People say go back in time to pick the winning lotto numbers.

I say go back in time and sell my 8TB disk for 80 billion

3·2 months ago

For the price of a car I would expect the SSD to grow wheels so I could actually drive it

2·2 months ago

gpt-oss:20b is only 13GB

5·2 months ago

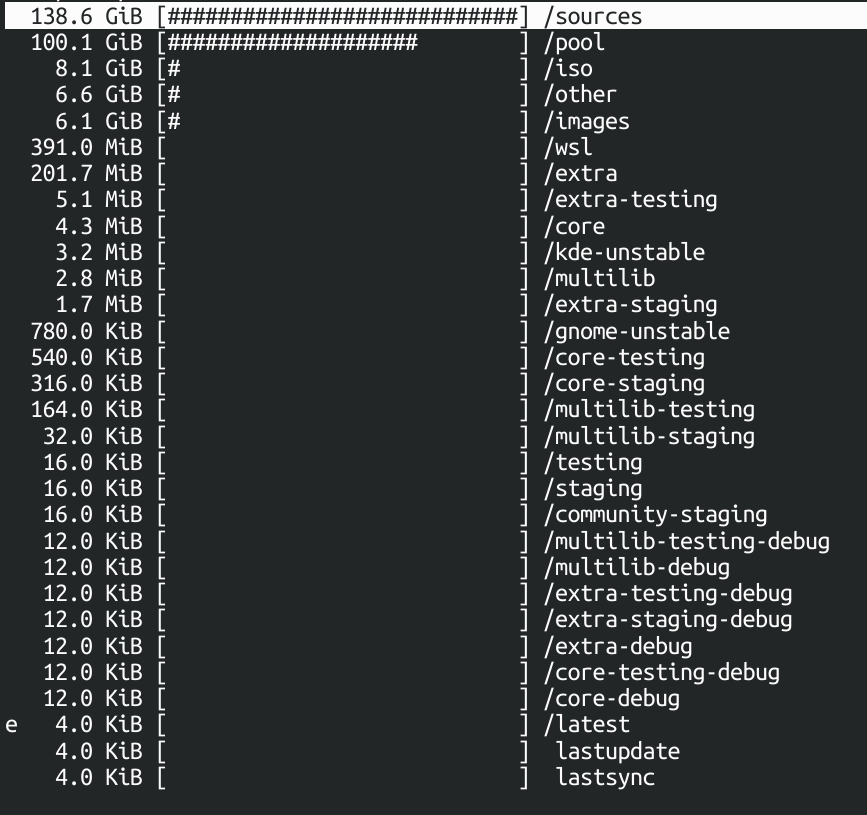

5·2 months agoThis includes everything for a total of 261G

5·2 months ago

I have 4 Arch machines, so I keep a local mirror of the entire Arch repo on my home server and just sync from there at full speed.
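On each client, pointing pacman at the home server comes down to one `Server` line in `/etc/pacman.d/mirrorlist`. A minimal sketch, assuming the server is reachable as `homeserver.lan` and serves the synced repo tree over HTTP under `/archlinux/`; the host name, the path, and the helper `write_local_mirrorlist` are all hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a pacman mirrorlist that points at a LAN mirror.
# ASSUMPTION: the mirror serves the standard layout <base>/$repo/os/$arch.
write_local_mirrorlist() {
  local host="$1"
  local out="${2:-/etc/pacman.d/mirrorlist}"
  # Single quotes keep $repo and $arch literal; pacman substitutes them itself.
  printf 'Server = http://%s/archlinux/$repo/os/$arch\n' "$host" > "$out"
}
```

Writing to the default path needs root; after that, `pacman -Syu` on each machine pulls packages over the LAN. Because the helper overwrites the file, adding a public mirror below the local line by hand would keep updates working when the home server is down, since pacman tries `Server` entries in order.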

10·2 months ago

ollama works fine on my 9070 XT.

I tried gpt-oss:20b and it gives around 17 tokens per second, which seems about as fast as you can read the reply.

Idk how it compares to the Nvidia equivalent tho
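As a rough check on "as fast as you can read": `ollama run gpt-oss:20b --verbose` prints timing stats, including an eval rate in tokens/s, after each reply, and converting that to words per minute is one line of arithmetic. The sketch assumes about 0.75 English words per token, a common rule of thumb rather than a measured property of gpt-oss; `tps_to_wpm` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert generation speed (tokens/s) to words per minute.
# ASSUMPTION: ~0.75 words per token by default; the real ratio depends on the tokenizer and text.
tps_to_wpm() {
  local tps="$1"
  local words_per_token="${2:-0.75}"
  # words/min = tokens/s * words/token * 60 s/min, rounded to the nearest integer
  awk -v t="$tps" -v w="$words_per_token" 'BEGIN { printf "%d\n", t * w * 60 + 0.5 }'
}

tps_to_wpm 17   # prints 765
```

At 17 tokens/s that is roughly 765 words per minute, well above commonly cited silent reading speeds of about 200 to 300 words per minute, so the reply comfortably keeps ahead of a reader.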

4·3 months ago

just added

155·3 months ago

The north doesn’t need backups because errors do not happen there /s

I tried it in the past and it felt too heavy for my use case. Also, for some reason the sidebar menu doesn’t show all the items at all times; it only shows the ones related to the branch you just went into.

Also it seems pretty dead updates-wise.

mdBook is really nice if you don’t mind the lack of dynamic editing in a web browser.


23·4 months ago

I have a Pixel 9 Pro, which is supposed to get security updates until 2031, but at the pace Google is locking Android down I wonder whether it will even be viable to stay on a de-Googled AOSP ROM until then.

I feel like the future is leading us to a place where we will have to split our mobile computing between a trusted but slow and unreliable main phone and a secondary mainstream device for banking/government apps.

15·5 months ago

You can probably get a Pixel 8a very affordably now, and it will get updates until 2031. It’s more likely that by then you will have dropped and broken your phone, or simply want a hardware upgrade.

It also supports hardware AV1 decoding, even at 4K resolution.

17·6 months ago

I always run headscale on my own server for my own network.

2·6 months ago

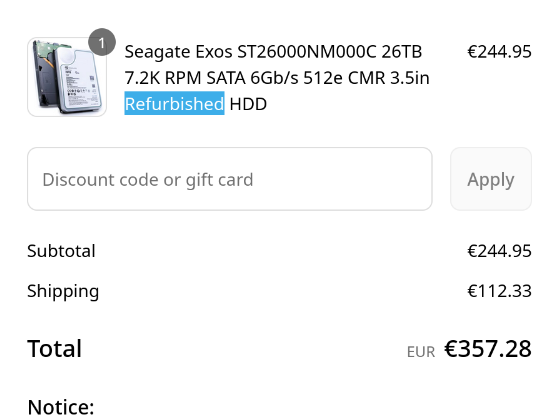

2·6 months agoThat’s not included VAT kek

And for some reason it’s always been this pricy with serverpartdeals, other stuff from USA not so much

81·6 months ago

ServerPartDeals has great prices on the hardware.

But the shipping to my location is for some reason 120€, 50% on top of the product price, wtf

Is the warehouse on the moon or what!

echo 'dXIgbW9tCmhhaGEgZ290dGVtCg==' | base64 -d