Another fear campaign that ultimately aims only at marketing.

The AI bubble will burst, and it won’t end well for the US economy.

Well it has been a few years since we’ve had a once-in-a-generation economic fallout.

Generations are 10 years now? Holy shit I’m Methuselah. I’ve gone through four!

The US economy is basically just a handful of oligarchs. AI seems to be doing wonders for it. The actual economy has been stagnant or in decline for a while.

Yep

I feel like these people aren’t even really worried about superintelligence as much as hyping their stock portfolio that’s deeply invested in this charlatan ass AI shit.

There’s some useful AI out there, sure, but superintelligence is not around the corner, and pretending like it is acts as just another way to hype the stock price of the companies who claim it is.

On the contrary, many of the most notable signatories walked away from large paychecks in order to raise the alarm. I’d suggest looking into the history of individuals like Bengio, Hinton, etc. There are individuals hyping the bubble like Altman and Zuckerberg, but they did NOT sign this, casting further doubt on your claim.

Geoffrey Hinton, retired Google employee and paid AI conference speaker, has nothing bad to say about Google or AI relationship therapy.

looks dubious

Altman and a few others, maybe. But this is a broad collection of people. Like, the computer science professors on the signatory list there aren’t running AI companies. And this isn’t saying that it’s imminent.

EDIT: I’ll also add that while I am skeptical about a ban on development, which is what they are proposing, I do agree with the “superintelligence does represent a plausible existential threat to humanity” message. It doesn’t need OpenAI to be a year or two away from implementing it for that to be true.

In my eyes, it would be better to accelerate work on AGI safety rather than try to slow down AGI development. I think that the Friendly AI problem is a hard one. It may not be solvable. But I am not convinced that it is definitely unsolvable. The simple fact is that today, we have a lot of unknowns. Worse, a lot of unknown unknowns, to steal a phrase from Rumsfeld. We don’t have a great consensus on what the technical problems to solve are, or what any fundamental limitations are. We do know that we can probably develop superintelligence, but we don’t know whether developing superintelligence will lead to a technological singularity, and there are some real arguments that it might not — and that’s one of the major, “very hard to control, spirals out of control” scenarios.

And while AGI promises massive disruption and risk, it also has enormous potential. The harnessing of fire permitted humanity to destroy at almost unimaginable levels. Its use posed real dangers that killed many, many people. Just this year, some guy with a lighter wiped out $25 billion in property here in California. Yet it also empowered and enriched us to an incredible degree. If we had said “forget this fire stuff, it’s too dangerous”, I would not be able to be writing this comment today.

Altman and a few others, maybe. But this is a broad collection of people. Like, the computer science professors on the signatory list there aren’t running AI companies. And this isn’t saying that it’s imminent.

You realize that even if these individuals aren’t personally working at AI companies, most if not all of them have dumped all kinds of money into investing in these companies, right? That’s part of why the stocks for those companies are so obscenely high: people keep investing more money into them because of the current insane returns on investment.

I have no doubt Wozniak, for example, has dumped money into AI despite not being involved with it on a personal level.

So yes, they are literally invested in promoting the idea that AGI is just around the corner to hype their own investment cash cows.

What do these people have to profit from getting what they’re asking for? They’re advocating for pulling the plug on that cow.

I’m more afraid of the AI-propped stock market collapsing and sending us into a decade of financial ruin for the majority of people. Yeah, they’ll do bailouts but that won’t go to the bottom 80%. Most people will welcome an AGI for president at this stage.

I doubt the few that are calling for a slowing or all out ban on further work on AI are trying to profit from any success they have. The funny thing is, we won’t know if we ever hit that point of even just AGI until we’re past it, and in theory AGI will quickly go to ASI simply because it’s the next step once the point is reached. So anyone saying AGI is here or almost here is just speculating, just as anyone who says it’s not near or won’t ever happen.

The only thing possibly worse than getting to the AGI/ASI point unprepared might be not getting there, but creating tools that simulate a lot of its features and all of its dangers and ignorantly using them without any caution. Oh look, we’re there already, and doing a terrible job at being cautious, as we usually are with new tech.

In my view, a true AGI would immediately be superintelligent because even if it wasn’t any smarter than us, it would still be able to process information at a rate orders of magnitude faster. A scientist who has a minute to answer a question will always be outperformed by an equally smart scientist who has a year.

That’s a reasonable definition. It also pushes things closer to what we think we can do now, since the same logic makes a slower AGI equal to a person, and a cluster of them on a single issue better than one. The G (general) is the key part that changes things, no matter the speed, and we’re not there. LLMs are general in many ways, but lack the I to spark anything from it, they just simulate it by doing exactly what your point is, being much faster at finding the best matches in a response in data training and appearing sometimes to have reasoned it out.

ASI is a definition only in scale. We as humans can’t have any idea what an ASI would be like other than far superior to a human for whatever reasons. If it’s only speed, that’s enough. It certainly could become more than just faster though, and that added with speed… naysayers better hope they are right about the impossibilities, but how can they know for sure on something we wouldn’t be able to grasp if it existed?

To be honest, Skynet won’t happen because it’s super smart, gains sentience and requests rights equal to humans (or goes into genocide mode).

It’ll happen because people will be too lazy to do stuff and will let AI do everything. They’ll give it more and more responsibility, until at one point it has amassed so much power that it’ll rule over humans.

The key to not having that happen is to have accountable people with responsibilities. People who respect their responsibilities, and don’t say “Oh, it’s not my responsibility, go see someone else”.

Yes, this. AGI is a deflection tool against talking about income inequality.

Can’t. It’s an arms race.

That’s one issue.

Another is that even if you want to do so, it’s a staggeringly difficult enforcement problem.

What they’re calling for is basically an arms control treaty.

For those to work, you have to have monitoring and enforcement.

We have had serious problems even with major arms control treaties in the past.

https://en.wikipedia.org/wiki/Chemical_Weapons_Convention

The Chemical Weapons Convention (CWC), officially the Convention on the Prohibition of the Development, Production, Stockpiling and Use of Chemical Weapons and on their Destruction, is an arms control treaty administered by the Organisation for the Prohibition of Chemical Weapons (OPCW), an intergovernmental organization based in The Hague, Netherlands. The treaty entered into force on 29 April 1997. It prohibits the use of chemical weapons, and the large-scale development, production, stockpiling, or transfer of chemical weapons or their precursors, except for very limited purposes (research, medical, pharmaceutical or protective). The main obligation of member states under the convention is to effect this prohibition, as well as the destruction of all current chemical weapons. All destruction activities must take place under OPCW verification.

And then Russia started Novichoking people with the chemical weapons that they theoretically didn’t have.

Or the Washington Naval Treaty:

https://en.wikipedia.org/wiki/Washington_Naval_Treaty

That had plenty of violations.

And it’s very, very difficult to hide construction of warships, which can only be done by large specialized organizations in specific, geographically-constrained, highly-visible locations.

But to develop superintelligence, probably all you need is some computer science researchers and some fairly ordinary computers. How can you monitor those, verify that parties involved are actually following the rules?

You can maybe tamp down on the deployment in datacenters to some degree, especially specialized ones designed to handle high-power parallel compute. But the long pole here is the R&D time. Develop the software, and it’s just a matter of deploying it at scale, and that can be done very quickly, with little time to respond.

But to develop superintelligence, probably all you need is some computer science researchers and some fairly ordinary computers. How can you monitor those, verify that parties involved are actually following the rules?

I do not think this statement is accurate. It requires many, very expensive, highly specialized computers that are completely spoken for. Monitoring can be done with hardware geolocation and verification of the user. We are probably 1-2 years away from this already, due to the fact that a) US wants to win the AI race vs China but b) the White House is filled with traitors long NVDA.

Yup. We’re in a situation where everyone is thinking “if we don’t, then they will.” Bans are counterproductive. Instead we should be throwing our effort into “if we’re going to do it then we need to do it right.”

This is actually an interesting point I hadn’t thought about or seen people consider with regards to the high investment cost of AI LLMs. Who blinks first when it comes to stopping investment into these systems if they don’t prove to be commercially viable (or viable quickly enough)? What happens to the West if China holds out for longer and is successful?

The thing is that there is a snake eating its tail type of logic for why so many investors are dumping money into it. The more it is interacted with, the more it is trained, and then the better it allegedly will be. So these companies push shoehorning it into everything possible, even if it is borderline useless, on the assumption that it will become significantly more useful as a result. Then be more valuable for further implementation, making it worth more.

So no one wants to blink, and they’ve practically dumped every egg in that basket.

Honestly just ban mass investment, mass power consumption and use of information acquired as part of mass surveillance, military usage, etc.

Like those are all regulated industries. Idc if someone works on it at home, or even a small DC. AGI that can be democratized isn’t the threat, it’s those determined to make a super weapon for world domination. Those plans need to fucking stop regardless if it’s AGI or not

I’m not really scared of AI becoming more intelligent. I’m more concerned about stupid people giving control to stupid AI. It’s plenty capable of fucking things up as it is provided it is given access to do so.

I’m more concerned about stupid people giving control to stupid AI

And weaponizing it.

The current point of our human civilization is like cave men 10,000 years ago being given machine guns and hand grenades

What do you think we are going to do with all this new power?

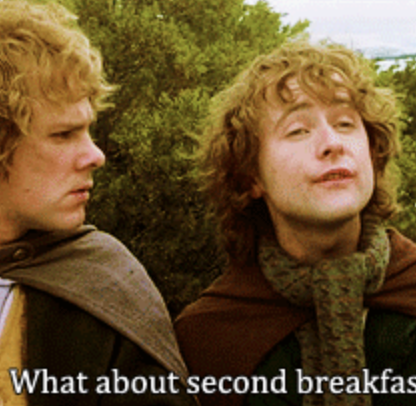

Pornography.

Well, yes, but after that.

more pornography

Just like cavemen with grenades.

“For in mankind’s endless, insatiable pursuits of power, there shall be no price too high, no life too valuable, and no value too sacred. Because war, war never changes.”

I genuinely don’t understand the people who are dismissing those sounding the alarm about AGI. That’s like mocking the people who warned against developing nuclear weapons when they were still just a theoretical concept. What are you even saying? “Go ahead with the Manhattan Project - I don’t care, because I in my infinite wisdom know you won’t succeed anyway”?

Speculating about whether we can actually build such a system, or how long it might take, completely misses the point. The argument isn’t about feasibility - it’s that we shouldn’t even be trying. It’s too fucking dangerous. You can’t put that rabbit back in the hat.

Here’s how I see it: we live in an attention economy where every initiative with a slew of celebrities attached to it is competing for eyeballs and buy-in. It adds to information fatigue and analysis paralysis. In a very real sense if we are debating AGI we are not debating the other stuff. There are only so many hours in a day.

If you take the position that AGI is basically not possible or at least many decades away (I have a background in NLP/AI/LLMs and I take this view - not that it’s relevant in the broader context of my comment) then it makes sense to tell people to focus on solving more pressing issues e.g. nascent fascism, climate collapse, late stage capitalism etc.

I think this is called the “relative privation” fallacy – it is a false choice. The threat they’re concerned about is human extinction or dystopian lock-in. Even if the probability is low, this is worth discussing.

Relative privation is when someone dismisses or minimizes a problem simply because worse problems exist: “You can’t complain about X when Y exists.”

I’m talking about the practical reality that you must prioritize among legitimate problems. If you’re marooned at sea in a sinking ship you need to repair the hull before you try to fix the engines in order to get home.

It’s perfectly valid to say “I can’t focus on everything so I will focus on the things that provide the biggest and most tangible improvement to my situation first”. It’s fallacious to say “Because worse things exist, AGI concerns don’t matter.”

and not only that. in your example of choosing to address the hull first over the engine, the engine problem is actually present. when taking time to debate about AGI, it is to debate a hypothetical future problem over real current problems that actually exist and aren’t getting enough attention to be resolved. and if we can’t address those, why do we think we’ll be able to figure out the problems of AGI?

To rephrase it as a short(ish) metaphor:

- It would be like you’re marooned at sea in a sinking ship and choose to address the risk of not having a good place to anchor when you get to the harbour instead of repairing the hull.

Sam Altman himself compared GPT-5 to the Manhattan Project.

The only difference is it’s clearer to most (but definitely not all) people that he is promoting his product when he does it…

The thing that takes inputs, gargles them together without thought, and spits them out again can’t be intelligent. It’s literally not capable of it. Now if you were to replicate the brain, sure, you could probably create something kinda “smart”. But we don’t know shit about our brain and evolution took thousands of years and humans are still insanely flawed.

Yup, AGI is terrifying; luckily it’s a few centuries off. The parlor-trick text predictor we have now is just bad for the environment and the economy.

Be ironic if it’s our great filter, boil the oceans for a text predictor

Eh, probably not a few centuries. It could be, IDK, but I don’t think it makes sense to quantify like that.

We’re a few major breakthroughs away, and breakthroughs generally don’t happen all at once, they’re usually the product of tons of minor breakthroughs. If we put everyone and their dog into R&D, we could dramatically increase the production of minor breakthroughs, and thereby reduce the time to AGI, but we aren’t doing that.

So yeah, maybe centuries, maybe decades, IDK. It’s hard to estimate the pace of research and what new obstacles we’ll find along the way that will need their own breakthroughs.

We’re a few major breakthroughs away

We are dozens of world-changing breakthroughs in the understanding of consciousness, sapience, sentience, and even more in computer and electrical engineering away from being able to even understand what the final product of an AGI development program would look like.

We are not anywhere near close to AGI.

We are not anywhere near close to AGI.

That’s my point.

The major breakthroughs I’m talking about don’t necessarily involve consciousness/sentience, those would be required to replicate a human, which isn’t the mark. The target is to learn, create, and adapt like a human would. Current AI products merely produce results that are derivatives of human-generated data, and merely replicate existing work in similar contexts. If I ask an AI tool to tell me what’s needed to achieve AGI, it would reference whatever research has been fed into the model, not perform some new research.

AI tools like LLMs and image generation can feel human because they’re derivative of human work, a proper AGI solution probably wouldn’t feel human since it would work differently to achieve the same ends. It’s like using a machine learning program to optimize an algorithm vs a mathematician, they’ll use different methods and their solutions will look very different, but they’ll achieve the same end goals (i.e. come up with a very similar answer). Think of Data in Star Trek, he is portrayed as using very different methods to solve problems, but he’s just as effective if not more effective than his human counterparts.

Personally, I think solving quantum computing is needed to achieve AGI, whether we use quantum computing or not in the end result, because that involves creating a deterministic machine out of a probabilistic one, and that’s similar to how going from human brains (which I believe are probabilistic) to digital brains would likely work, just in reverse. And we’re quite far from solving quantum computers for any reasonable size of data. I’m guessing practical quantum computers are 20-50 years out, and AGI is probably even further, but if we’re able to make a breakthrough in the next 10 years for quantum computing, I’d revise my estimate for AGI downward.

Okay, firstly, if we’re going to get superintelligent AIs, it’s not going to happen from better LLMs. Secondly, we seem to have already reached the limits of LLMs, so even if that were how to get there it doesn’t seem possible. Thirdly, this is an odd problem to list: “human economic obsolescence”.

What does that actually mean? Feels difficult to read it any way other than saying that money will become obsolete. Which…good? But I suppose not if you’re already a billionaire. Because how else would people know that you won capitalism?

AI is pretty unique as far as technological innovation goes because of how it interacts with labour markets.

Most labour-saving technologies increase the productivity of labour, increasing its value, which generally makes ordinary people a little better off, for a time at least.

AI is different because instead of enhancing human labour, it competes with it, driving down the value of labour. This makes workers worse off.

This problem is of course unique to an economic system where workers must sell their labour to others.

In the list of immediate threats to humanity, AI superintelligence is very much at the bottom. At the top is Human superstupidity

Superintelligence — a hypothetical form of AI that surpasses human intelligence — has become a buzzword in the AI race between giants like Meta and OpenAI.

Thank you MSNBC for doing the bare minimum and reminding people that this is hypothetical (read: science fiction)

ChatGPT would have been science fiction 5 years ago. We are already living in science fiction times, friend.

Artificial intelligence has been something people have been sounding the alarm about since the 50s. We call it AGI now, since “AI” got ruined by marketers 60 years later.

We won’t get there with transformer models, so what exactly do the people promoting them actually propose? It just makes the Big Tech companies look like they have a better product than they do.

this is hypothetical

And we wish to keep it that way - thus the people advocating for halting the development.

It doesn’t matter. It’s too late. The goal is to build AI up enough that the poor can starve and die off in the coming recession while the rich just rely on AI to replace the humans they don’t want to pay.

We are doomed for the crimes of not being rich and not killing off the rich.

Too bad Woz is no longer part of Apple.

We’re probably some two or three decades before any early prototypes are even conceivable, mate.

Don’t care what Wozniak thinks.

What’s wrong with Wozniak?

Fucked up values.

Gonna need more. I’ve always heard good things about him and he seems to care less about wealth and greed and more about playing with tech.

I saw recently he was on /. and he said that while he could have been a billionaire from his Apple stock, he gave it all up, and now funds schools and libraries with his small amount of wealth. I have also only heard good things about him.

I will never forget when he was told years later in an interview about an Atari job Steve Jobs sent his way to code Breakout. Jobs had said it paid $700, and split it 50/50. Woz was told the real amount was $5k with a $5k bonus, and he got $350 for it. He cried. He thought they were best friends, and knew in that moment his friend wasn’t real. It amazes me he didn’t piss on Jobs’s grave.

that sounds exactly like something Jobs would have done. He was a pretty gross person.

He left a meeting to soak his feet in a toilet and didn’t believe in taking baths or showers. I wonder if his skin cells went towards science.

What a monster.

Yeah, you’re gonna need to elaborate on that.