A talk from the hacker conference 39C3 on how AI generated content was identified via a simple ISBN checksum calculator (in English).

A cylindrical human centipede is a really good analogy to how LLMs work.

Sort of related, I was fact checking some of the content on my own website that had been provided by someone who later turned out to be less than reliable.

There was one claim I was completely unable to find a source for, and I suspected it was an AI hallucination. I turned to ChatGPT to try to find a source, and it provided nearly the exact same sentence and cited my website as the source, thus completing the hallucination cycle.

I just deleted it all from my site and started over.

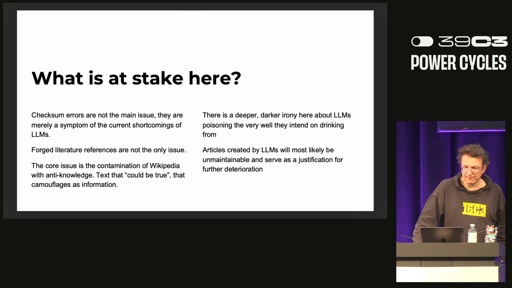

He notes that LLM vendors have been training their models on Wikipedia content. But if the content contains incorrect information and citations, you get the sort of circular (incorrect) reference that leads to misinformation.

One irony, he says, is that LLM vendors are now willing to pay for training data unpolluted by the hallucinated output their own products generate.

TL;DW:

He wrote a checksum verifier for ISBNs and discovered AI-generated content on Wikipedia with hallucinated sources. He used Claude to write the checksum verifier, and the irony is not lost on him. He tracked down those who submitted the fake articles and determined that many are doing so out of a misplaced desire to help, without understanding the limitations and pitfalls of using LLM-generated content without verification.

What’s the irony?

He used AI to write the anti-AI tool

Heads up he talks about this specifically at 26:30 for those who didn’t take the time to watch the video.

Which is kinda my favorite thing to do as of late, and what I prefer AI be used for.

I’m not talking about building a suite of black box tools. But tiny scripts to scrape, shape, and generate reports. Things I used to pull in a dozen node libraries to do and manually configure and patch up.

You know, busy work.

To be fair, humans are excellent at building anti-human tools

Now if there’s one thing you can be sure of, it’s that nothing is more powerful than a young boy’s wish. Except an Apache helicopter. An Apache helicopter has machine guns AND missiles. It is an unbelievably impressive complement of weaponry, an absolute death machine.

But wasn’t the issue with the AI stuff that it was false info, whereas the tool sounds like it worked as intended?

Here’s the explanation of the irony in this situation from an LLM ;)

Side note: I’d only thought about the LLM generated code irony. I’d missed the 2nd irony of the editors trying to be helpful in providing useful accurate knowledge but achieving the opposite.

It just seems like the tool he is using is working though…

Yes. Why are you fixated on this? LLMs are tools and they work, but you have to understand their abilities and limitations to use them effectively.

The guy who needed the anti-ai tool, did. The Wikipedia editors, didn’t.

I feel like it would be a lot more ironic if the tool didn’t work. It doesn’t seem very ironic to use a hammer to remove nails that were hammered into the wrong position with a hammer imo.

Yes, but the specific type of irony that this situation fits the definition of does not come from whether or not the tool they used worked for the intended purpose. The irony comes from the fact that they are relying on the output from LLM-generated content (ISBN checksum calculator) to determine the reliability of other LLM-generated content (hallucinated ISBN numbers).

Irony is a word that has a somewhat vague meaning and is often interpreted differently. If the tool they used did not work as intended and flagged a bunch of real ISBNs as being AI generated, the situation would (I think) be more ironic. They are still using AI to try and police AI, but with the additional layer of the outcome being the opposite of their intention.

But how does that diminish the irony? The story is still ironic as a whole, even though he achieved his goals.

I would feel like it would be ironic if it was after AI in general instead of the mistakes, I dunno

Right, but it’s also the same/similar tool that’s being used to damage the article with bad information. Like the LLM said, this is using the poison for the cure (also, amusing that we’re using the poison to explain the situation as well).

Yes, he’s using the tool (arguably) how it was designed and is working as intended, but so are the users posting misinformation. The tool is designed to take a prompt and spit out a mathematically appropriate response using words and symbols we interpret as language - be it a spoken or programming language.

Any tool can be used properly, and any tool can also be misused, whether maliciously or through incompetence.

But in this case the tool actually works well for one thing and not so well for another. It doesn’t feel that ironic to use a hammer to remove nails someone has hammered to the wrong place, if some sort of analogy is required here. You’d use a hammer because it is good at that job.

I think the point is it would have been truly ironic if the AI itself was the authoritative fact checker instead of merely being a tool that built another tool.

If Claude was the fact checking tool instead of the ISBN validator, that’s the real irony.

If in a messed up future, only an AI could catch a fellow AI, what’s stopping the AI collective from returning false negatives? Who watches the watchers?

WHY poison Wikipedia with AI? I don’t get it, what satisfaction does a person get from adding text they didn’t write to Wikipedia? It’s not like you get a gold medal, or your neighbor sees you as a hero, or you get a discount at McDonald’s, there’s no glamour in being an editor, so why even bother if you’re not going to write quality content out of passion?

Please leave Wikipedia alone, you don’t have to shove your AI bullshit everywhere, please leave somewhere in this god forsaken internet alone. Why must AI bros touch everything.

He talks about the reasons why people are doing this at 21:30 in the video.

It’s possibly from people trying to help, but don’t understand AI hallucinations.

For example, a Wikipedia article might say, “John Smith spent a year at Oxford University before moving to London.[Citation Needed]” So the article already contains information, but lacks proper citation.

Someone comes along and says, “Aha! AI can solve this,” and asks the AI, “Did John Smith spend a year at Oxford before moving to London? Please provide citations.” The AI returns, “Yes, of course he did, according to the book ‘John Smith: Biography of a Man’, ISBN 123456789.”

So someone adds that as a citation and now Wikipedia has been improved.

Or… has it? The ISBN 123456789 is invalid. No book could possibly have that number. If the ISBN is invalid, then the book is also likely invalid, and the citation is also invalid.
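For anyone wondering what the check looks like, here is a minimal sketch of an ISBN checksum test in Python. It is not the tool from the talk; the function names are made up for illustration. It uses the standard rules: ISBN-10 weights the digits 10 down to 1 and the sum must be divisible by 11 (with `X` standing for 10 in the last position), while ISBN-13 alternates weights 1 and 3 and the sum must be divisible by 10. The example number above fails before the arithmetic even starts, because it has 9 digits rather than 10 or 13.

```python
def normalize(raw: str) -> str:
    """Strip hyphens and spaces, uppercase a trailing 'x'."""
    return raw.replace("-", "").replace(" ", "").upper()


def is_valid_isbn10(raw: str) -> bool:
    s = normalize(raw)
    if len(s) != 10 or not s.isascii():
        return False
    if not s[:9].isdigit() or not (s[9].isdigit() or s[9] == "X"):
        return False
    # 'X' is only allowed as the check digit and stands for 10.
    values = [int(c) for c in s[:9]] + [10 if s[9] == "X" else int(s[9])]
    total = sum(weight * value for weight, value in zip(range(10, 0, -1), values))
    return total % 11 == 0


def is_valid_isbn13(raw: str) -> bool:
    s = normalize(raw)
    if len(s) != 13 or not s.isascii() or not s.isdigit():
        return False
    # Weights alternate 1, 3, 1, 3, ... starting from the first digit.
    total = sum(int(c) * (3 if i % 2 else 1) for i, c in enumerate(s))
    return total % 10 == 0


def is_valid_isbn(raw: str) -> bool:
    """True if the string passes either the ISBN-10 or the ISBN-13 checksum."""
    return is_valid_isbn10(raw) or is_valid_isbn13(raw)


if __name__ == "__main__":
    for candidate in ["0-306-40615-2", "978-0-306-40615-7", "123456789"]:
        print(candidate, is_valid_isbn(candidate))
```

Passing the checksum only means the number is well-formed. It does not prove the book exists or that it supports the claim being cited.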

So the satisfaction was someone who couldn’t previously help Wikipedia, now thinking they can help Wikipedia. At face value that’s a good thing, someone who wants to help Wikipedia. The problem is that they think they’re helping, but they’re actually harming.

I thought wikipedia mods are overly zealous with checking submissions by new users.

Seemingly not so. Kinda worrisome

The problem is that the volume of slop available completely overwhelms all efforts at quality control. Zealotry only goes so far at turning back the tsunami of shite.

People like to help, they don’t know that LLMs generate bullshit. This should answer the “why”.

People go to libraries asking for books that don’t even exist and some think that the libraries are hiding them from them, but the ISBNs are just hallucinations… 😅

You could just verify all ISBNs are valid on each edit, which would have some value in finding typos, but not doing that leaves this honeypot that could be used to identify AI slop accounts. Clever. Though maybe they’re wise to it now.

He also points out that there are many ISBNs that are “wrong”, but are actually correct in the real world. This is because publishers don’t always understand the checksum and just increment the ISBN when publishing a new book. In many library systems there is a checkbox next to the ISBN entry field where you can say something like “I understand this ISBN is wrong, but it is correct in the real world”.

So just flagging wrong ISBNs would lead to a lot of false positives and would need specific structures to deal with that.
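One way to picture such a structure is a sketch like the one below, assuming a reviewer-maintained set of ISBNs that fail the checksum but are confirmed to be printed on real books (the code equivalent of that checkbox). The names `triage` and `confirmed_in_print` are hypothetical, and the override entry in the example is a placeholder, not a real known exception.

```python
def normalize(raw: str) -> str:
    return raw.replace("-", "").replace(" ", "").upper()


def checksum_ok(raw: str) -> bool:
    """Standard ISBN-10 (mod 11) and ISBN-13 (mod 10) checksum test."""
    s = normalize(raw)
    if not s.isascii():
        return False
    if len(s) == 13 and s.isdigit():
        return sum(int(c) * (3 if i % 2 else 1) for i, c in enumerate(s)) % 10 == 0
    if len(s) == 10 and s[:9].isdigit() and (s[9].isdigit() or s[9] == "X"):
        values = [int(c) for c in s[:9]] + [10 if s[9] == "X" else int(s[9])]
        return sum(w * v for w, v in zip(range(10, 0, -1), values)) % 11 == 0
    return False


def triage(raw: str, confirmed_in_print: set[str]) -> str:
    """Classify a cited ISBN instead of rejecting it outright.

    confirmed_in_print holds normalized ISBNs that a human has verified
    against a physical book or catalogue despite the bad checksum.
    """
    if checksum_ok(raw):
        return "ok"
    if normalize(raw) in confirmed_in_print:
        return "override"      # wrong on paper, but real in the world
    return "needs-review"      # candidate for a human to look at


if __name__ == "__main__":
    overrides = {normalize("978-0-306-40615-8")}  # placeholder entry
    for isbn in ["978-0-306-40615-7", "978-0-306-40615-8", "123456789"]:
        print(isbn, triage(isbn, overrides))
```

The point of the third bucket is that a failed checksum is a reason for a person to look, not proof of AI slop.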

That’s extremely frustrating. Like, it’s literally your job to get that number correct…

People frustrate me

Watch out for Mr. Perfect here. Ffs

I think most of the contributors aren’t going to get wise to it, because they have no idea that what they are doing is wrong.

Can someone tl;dw me? I’m curious as to how comment gets tied to isbn

Not watched yet, but I suspect AI edits are using hallucinated citations with ISBNs that don’t even pass a checksum test. AI may improve on this if someone trains them about ISBNs better, but it’s cool if this sort of test weeds some slop out for now.

Correct.
