Explanation for newbies:

- Shell is the programming language that you use when you open a terminal on Linux or macOS. Well, actually “shell” is a family of languages with many different implementations (bash, dash, ash, zsh, ksh, fish, …)

- Writing programs in shell (called “shell scripts”) is a harrowing experience because the language is optimized for interactive use at a terminal, not for writing extensive applications.

- The two lines in the meme change the shell’s behavior to be slightly less headache-inducing for the programmer:

  - `set -euo pipefail` is the short form of the following three commands:
    - `set -e`: exit on the first command that fails, rather than plowing through ignoring all errors
    - `set -u`: treat references to undefined variables as errors
    - `set -o pipefail`: if a command piped into another command fails, treat that as an error
  - `export LC_ALL=C` tells other programs to not do weird things depending on locale. For example, it forces `seq` to output numbers with a period as the decimal separator, even on systems where a comma is the default decimal separator (Russian, Dutch, etc.).

- The title text references “POSIX”, which is a document that standardizes, among other things, what features a shell must have. POSIX does not require a shell to implement `pipefail`, so if you want your script to run on as many different platforms as possible, then you cannot use that feature.
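A rough sketch of what the two lines in the meme change, plus one way a script could check for `pipefail` before relying on it. Each check runs in a child `bash` so that a deliberate failure cannot end the demo. All variable names are made up for illustration, and the probe at the end is a common idiom rather than anything the meme prescribes.

```shell
#!/bin/sh
# set -e: the child stops at `false`, so "after" is never printed.
e_out=$(bash -c 'set -e; echo before; false; echo after')
e_status=$?

# set -u: expanding an unset variable becomes a fatal error.
bash -c 'set -u; echo "$never_assigned"' 2>/dev/null
u_status=$?

# Without pipefail, a pipeline reports only its last command's status.
bash -c 'false | true'
plain_status=$?

# With pipefail, a failure anywhere in the pipeline is reported.
bash -c 'set -o pipefail; false | true'
pipefail_status=$?

# LC_ALL=C pins number formatting and sort order, whatever the user's locale is.
c_number=$(export LC_ALL=C; printf '%.1f' 0.5)
c_first=$(export LC_ALL=C; printf '%s\n' b A a B | sort | head -n 1)

# Probe for pipefail in a subshell, so a shell without it does not abort here.
if (set -o pipefail) 2>/dev/null; then
    pipefail_supported=yes
else
    pipefail_supported=no
fi

echo "set -e:        output='$e_out' status=$e_status"
echo "set -u:        status=$u_status"
echo "false | true:  status=$plain_status (plain), $pipefail_status (pipefail)"
echo "LC_ALL=C:      number=$c_number, first sorted item=$c_first"
echo "pipefail here: $pipefail_supported"
```

A script that has to run on several shells could use the result of the probe to decide whether to call `set -o pipefail` at all.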

Gotta love a meme that comes with a `man` page!

People say that if you have to explain the joke then it’s not funny. Not here, here the explanation is part of the joke.

It is different in spoken form, written form (chat) and written as a post (like here).

In person, you get a reaction almost immediately. Written as a short chat, you also get a reaction. But like this is more of an accessibility thing rather than the joke not being funny. You know, like those text descriptions of an image (usually for memes).

This is much better than a man page. Like, have you seen those things?

I really recommend that, if you haven’t, you look at Bash’s man page.

It’s just amazing.

I think you mean a`man`zing.

I’ll see myself out.

Come to think of it, I probably have never done so. Now I’m scared.

I’m divided between saying it’s really great or that it should be a book and the man page should be something else.

Good thing man has search, bad thing a lot of people don’t know about that.

`set -euo pipefail` is, in my opinion, an antipattern. This page does a really good job of explaining why. pipefail is occasionally useful, but should be toggled on and off as needed, not left on. IMO, people should just write shell the way they write go, handling every command that could fail individually. it’s easy if you write a `die` function like this:

```bash
die () {
  message="$1"; shift
  return_code="${1:-1}"
  printf '%s\n' "$message" 1>&2
  exit "$return_code"
}

# we should exit if, say, cd fails
cd /tmp || die "Failed to cd /tmp while attempting to scrozzle foo $foo"

# downloading something? handle the error. Don't like ternary syntax? use if
if ! wget https://someheinousbullshit.com/"$foo"; then
  die "failed to get unscrozzled foo $foo"
fi
```

It only takes a little bit of extra effort to handle the errors individually, and you get much more reliable shell scripts. To replace -u, just use shellcheck with your editor when writing scripts. I’d also highly recommend https://mywiki.wooledge.org/ as a resource for all things POSIX shell or Bash.

I’ve been meaning to learn how to avoid using pipefail, thanks for the info!

Putting `or die "blah blah"` after every line in your script seems much less elegant than op’s solution

The issue with `set -e` is that it’s hideously broken and inconsistent. Let me copy the examples from the wiki I linked.

Or, “so you think set -e is OK, huh?”

Exercise 1: why doesn’t this example print anything?

```bash
#!/usr/bin/env bash
set -e
i=0
let i++
echo "i is $i"
```

Exercise 2: why does this one sometimes appear to work? In which versions of bash does it work, and in which versions does it fail?

```bash
#!/usr/bin/env bash
set -e
i=0
((i++))
echo "i is $i"
```

Exercise 3: why aren’t these two scripts identical?

```bash
#!/usr/bin/env bash
set -e
test -d nosuchdir && echo no dir
echo survived
```

```bash
#!/usr/bin/env bash
set -e
f() { test -d nosuchdir && echo no dir; }
f
echo survived
```

Exercise 4: why aren’t these two scripts identical?

```bash
set -e
f() { test -d nosuchdir && echo no dir; }
f
echo survived
```

```bash
set -e
f() { if test -d nosuchdir; then echo no dir; fi; }
f
echo survived
```

Exercise 5: under what conditions will this fail?

```bash
set -e
read -r foo < configfile
```

And now, back to your regularly scheduled comment reply.

`set -e` would absolutely be more elegant if it worked in a way that was easy to understand. I would be shouting its praises from my rooftop if it could make Bash into less of a pile of flaming plop. Unfortunately, `set -e` is, by necessity, a labyrinthian mess of fucked up hacks.

Let me leave you with an allegory about `set -e` copied directly from that same wiki page. It’s too long for me to post it in this comment, so I’ll respond to myself.

From https://mywiki.wooledge.org/BashFAQ/105

Once upon a time, a man with a dirty lab coat and long, uncombed hair showed up at the town police station, demanding to see the chief of police. “I’ve done it!” he exclaimed. “I’ve built the perfect criminal-catching robot!”

The police chief was skeptical, but decided that it might be worth the time to see what the man had invented. Also, he secretly thought, it might be a somewhat unwise move to completely alienate the mad scientist and his army of hunter robots.

So, the man explained to the police chief how his invention could tell the difference between a criminal and law-abiding citizen using a series of heuristics. “It’s especially good at spotting recently escaped prisoners!” he said. “Guaranteed non-lethal restraints!”

Frowning and increasingly skeptical, the police chief nevertheless allowed the man to demonstrate one robot for a week. They decided that the robot should patrol around the jail. Sure enough, there was a jailbreak a few days later, and an inmate digging up through the ground outside of the prison facility was grabbed by the robot and carried back inside the prison.

The surprised police chief allowed the robot to patrol a wider area. The next day, the chief received an angry call from the zookeeper. It seems the robot had cut through the bars of one of the animal cages, grabbed the animal, and delivered it to the prison.

The chief confronted the robot’s inventor, who asked what animal it was. “A zebra,” replied the police chief. The man slapped his head and exclaimed, “Curses! It was fooled by the black and white stripes! I shall have to recalibrate!” And so the man set about rewriting the robot’s code. Black and white stripes would indicate an escaped inmate UNLESS the inmate had more than two legs. Then it should be left alone.

The robot was redeployed with the updated code, and seemed to be operating well enough for a few days. Then on Saturday, a mob of children in soccer clothing, followed by their parents, descended on the police station. After the chaos subsided, the chief was told that the robot had absconded with the referee right in the middle of a soccer game.

Scowling, the chief reported this to the scientist, who performed a second calibration. Black and white stripes would indicate an escaped inmate UNLESS the inmate had more than two legs OR had a whistle on a necklace.

Despite the second calibration, the police chief declared that the robot would no longer be allowed to operate in his town. However, the news of the robot had spread, and requests from many larger cities were pouring in. The inventor made dozens more robots, and shipped them off to eager police stations around the nation. Every time a robot grabbed something that wasn’t an escaped inmate, the scientist was consulted, and the robot was recalibrated.

Unfortunately, the inventor was just one man, and he didn’t have the time or the resources to recalibrate EVERY robot whenever one of them went awry. The robot in Shangri-La was recalibrated not to grab a grave-digger working on a cold winter night while wearing a ski mask, and the robot in Xanadu was recalibrated not to capture a black and white television set that showed a movie about a prison break, and so on. But the robot in Xanadu would still grab grave-diggers with ski masks (which it turns out was not common due to Xanadu’s warmer climate), and the robot in Shangri-La was still a menace to old televisions (of which there were very few, the people of Shangri-La being on the average more wealthy than those of Xanadu).

So, after a few years, there were different revisions of the criminal-catching robot in most of the major cities. In some places, a clever criminal could avoid capture by wearing a whistle on a string around the neck. In others, one would be well-advised not to wear orange clothing in certain rural areas, no matter how close to the Harvest Festival it was, unless one also wore the traditional black triangular eye-paint of the Pumpkin King.

Many people thought, “This is lunacy!” But others thought the robots did more good than harm, all things considered, and so in some places the robots are used, while in other places they are shunned.

The end.

This is great and thanks for taking the time to enlighten us 😄

No worries! Bash was my first language, and I still unaccountably love it after 15 years. I hate it and say mean things about it, but I’m usually pleased when I get to write some serious Bash.

Woah, that `((i++))` triggered a memory I forgot about. I spent hours trying to figure out what fucked up my `$?` one day.

When I finally figured it out: “You’ve got to be kidding me.”

When I fixed it with `((++i))`: “SERIOUSLY! WTAF Bash!”

Exercise 6:

```bash
set -e
f() { false; echo survived; }
if ! f; then :; fi
```

That one was fun to learn.
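Both surprises come down to exit statuses, and a small experiment makes them visible. This is only a sketch: each case runs in a child `bash` so the outcome can be captured, and the variable names are invented for the demo.

```shell
#!/bin/sh
# ((expr)) returns status 1 when expr evaluates to 0.
# i++ yields the old value (0), while ++i yields the new value (1).
post_status=$(bash -c 'i=0; ((i++)); echo $?')
pre_status=$(bash -c 'i=0; ((++i)); echo $?')

# Exercise 6: set -e is ignored inside f, because f runs as an `if` condition.
cond_out=$(bash -c 'set -e; f() { false; echo survived; }; if ! f; then :; fi')

# Called as a plain command, the same function stops at `false`.
plain_out=$(bash -c 'set -e; f() { false; echo survived; }; f')
plain_status=$?

echo "((i++)) with i=0 -> status $post_status"
echo "((++i)) with i=0 -> status $pre_status"
echo "f as a condition -> printed '$cond_out'"
echo "f on its own     -> printed '$plain_out', status $plain_status"
```

Under `set -e`, that status of 1 from `((i++))` is what ends the script in exercise 2.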

Even with all the jank and unreliability, I think `set -e` does still have some value as a last resort for preventing unfortunate accidents. As long as you don’t use it for implicit control flow, it usually (exercise 6 notwithstanding) does what it needs to do and fails early when some command unexpectedly returns an error.

I personally don’t believe there’s a case for it in the scripts I write, but I’ve spent years building the `|| die` habit to the point where I don’t even think about it as I’m writing. I’ll probably edit my post to be a little less absolute, now that I’m awake and have some caffeine in me.

One other benefit I forgot to mention to explicit error handling is that you get to actually log a useful error message. Being able to `rg 'failed to scrozzle foo.* because service y was not available'` and immediately find the exact line in the script that failed is so nice. It’s not quite a stack trace with line numbers, but it’s much nicer than what you have with bash by default or with set -e.

After tens of thousands of bash lines written, I have to disagree. The article seems to argue against use of -e due to unpredictable behavior; while that might be true, I’ve found having it in my scripts is more helpful than not.

Bash is clunky. -euo pipefail is not a silver bullet but it does improve the reliability of most scripts. Expecting the writer to check the result of each command is both unrealistic and creates a lot of noise.

When using this error handling pattern, most lines aren’t even for handling them, they’re just there to bubble it up to the caller. That is a distraction when reading a piece of code, and a nuisance when writing it.

For the few times that I actually want to handle the error (not just pass it up), I’ll do the “or” check. But if the script should just fail, -e will do just fine.

This is why I made the reference to Go. I honestly hate Go, I think exceptions are great and very ergonomic and I wish that language had not become so popular. However, a whole shitload of people apparently disagree, hence the popularity of Go and the acceptance of its (imo) terrible error handling. If developers don’t have a problem with it in Go, I don’t see why they’d have a problem with it in Bash. The error handling is identical to what I posted and the syntax is shockingly similar. You must unpack the return of a func in Go if you’re going to assign, but you’re totally free to just assign an err to `_` in Go and be on your way, just like you can ignore errors in Bash. The objectively correct way to write Go is to handle every `err` that gets returned to you, either by doing something, or passing it up the stack (and possibly wrapping it). It’s a bunch of bubbling up. My scripts end up being that way too. It’s messy, but I’ve found it to be an incredibly reliable strategy. Plus, it’s really easy for me to grep for a log message and get the exact line where I encountered an issue.

This is all just my opinion. I think this is one of those things where the best option is to just agree to disagree. I will admit that it irritates me to see blanket statements saying “your script is bad if you don’t set -euo pipefail”, but I’d be totally fine if more people made a measured recommendation like you did. I likely will never use set -e, but if it gets the bills paid for people then that’s fine. I just think people need to be warned of the footguns.

EDIT: my autocorrect really wanted to fuck up this comment for some reason. Apologies if I have a dumb number of typos.

Yeah, while `-e` has a lot of limitations, it shouldn’t be thrown out with the bathwater. The unofficial strict mode can still de-weird bash to an extent, and I’d rather drop bash altogether when they’re insufficient, rather than try increasingly hard to work around bash’s weirdness. (I.e. I’d throw out the bathwater, baby and the family that spawned it at that point.)

Yup, and `set -e` can be used as a try/catch in a pinch (but your way is cleaner)

I was tempted for years to use it as an occasional try/catch, but learning Go made me realize that exceptions are amazing and I miss them, but that it is possible (but occasionally hideously tedious) to write software without them. Like, I feel like anyone who has written Go competently (i.e. they handle every returned `err` on an individual or aggregated basis) should be able to write relatively error-handled shell. There are still the billion other footguns built directly into bash that will destroy hopes and dreams, but handling errors isn’t too bad if you just have a little `die` function and the determination to use it.

“There are still the billion other footguns built directly into bash that will destroy hopes and dreams, but”

That’s well put. I might put that at the start of all of my future comments about `bash` in the future.

Yep. Bash was my first programming language so I have absolutely stepped on every single one of those goddamn pedblasters. I love it, but I also hate it, and I am still drawn to using it.

This joke comes with more documentation than most packages.

Lol, I love that someone made this. What if your input has newlines tho, gotta use that NUL terminator!

God, I wish more tools had nice NUL-separated output. Looking at you, `jq`. I dunno why this issue has been open for so long, but it hurts me. Like, they’ve gone back and forth on this so many times…

`~$ man !!`

Does this joke have a documentation page?

unironically, yes

Shell is great, but if you’re using it as a programming language then you’re going to have a bad time. It’s great for scripting, but if you find yourself actually programming in it then save yourself the headache and use an actual language!

Your scientists were so preoccupied with whether they could, they didn’t stop to think if they should

Honestly, the fact that bash exposes low level networking primitives like a TCP socket via /dev/tcp is such a godsend. I’ve written an HTTP client in Bash before when I needed to get some data off of a box that had a fucked up filesystem and only had an emergency shell. I would have been totally fucked without /dev/tcp, so I’m glad things like it exist.

EDIT: oh, the article author is just using netcat, not doing it all in pure bash. That’s a more practical choice, although it’s way less fun and cursed.

EDIT: here’s a webserver written entirely in bash. No netcat, just the /bin/bash binary https://github.com/dzove855/Bash-web-server
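For anyone who hasn’t seen the `/dev/tcp` trick, a bare-bones HTTP GET in pure bash could look roughly like this. It is a sketch, not the client described above: `build_request` and `http_get` are hypothetical helper names, it speaks plain HTTP/1.0 with no TLS, and it assumes a bash built with network redirections enabled.

```shell
#!/usr/bin/env bash
# build_request HOST PATH: print a minimal HTTP/1.0 request (hypothetical helper).
build_request() {
    local host=$1 path=$2
    printf 'GET %s HTTP/1.0\r\nHost: %s\r\nConnection: close\r\n\r\n' "$path" "$host"
}

# http_get HOST [PATH] [PORT]: send the request and print the raw response,
# headers included. bash itself opens the socket via the /dev/tcp redirection.
http_get() {
    local host=$1 path=${2:-/} port=${3:-80}
    {
        build_request "$host" "$path" >&3   # write the request to the socket
        cat <&3                             # read until the server closes it
    } 3<>"/dev/tcp/$host/$port"
}
```

Something like `http_get example.com /` would then dump the response to stdout. If the connection cannot be opened, the redirection fails and the function returns a non-zero status.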

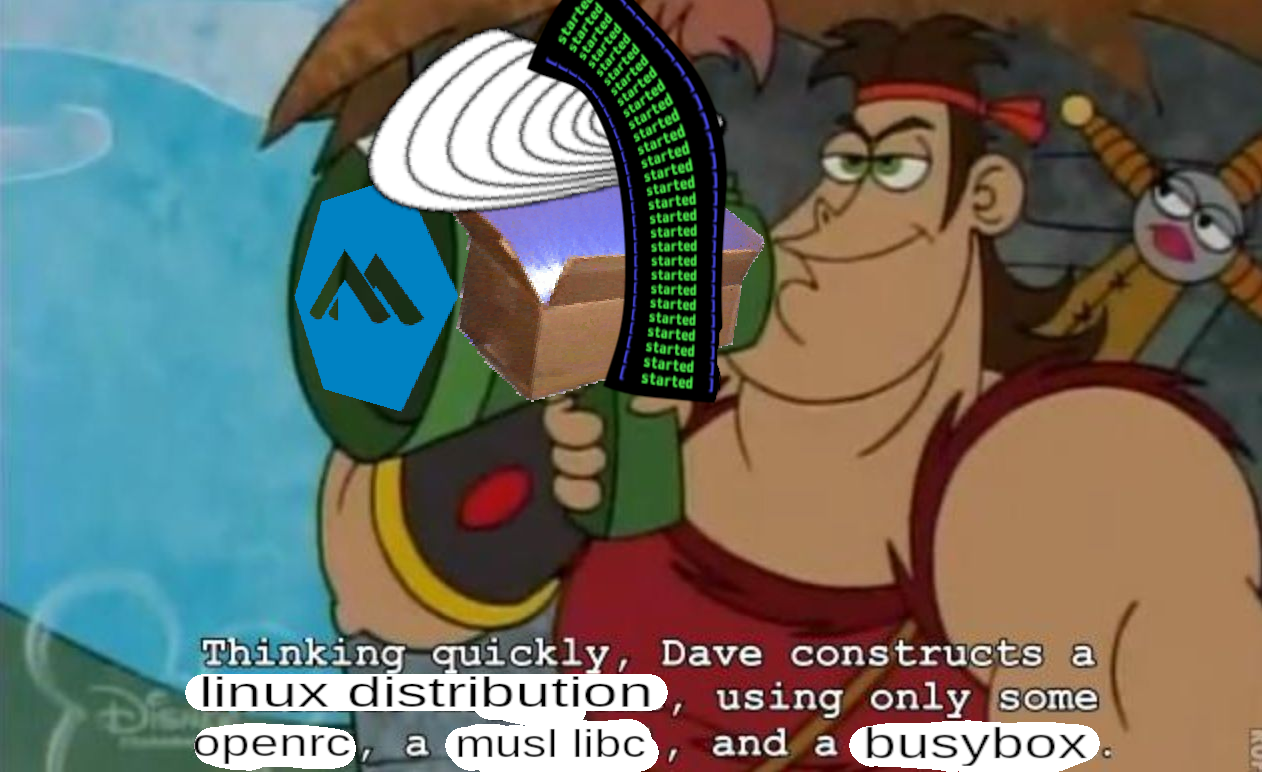

Alpine linux, one of the most popular distros to use inside docker containers (and arguably good for desktop, servers, and embedded) is held together by shell scripts, and it’s doing just fine. The installer, helper commands, and init scripts are all written for busybox sh. But I guess that falls under “scripting” by your definition.

Aka busybox in disguise 🥸

The few times I’ve used shell for programming it was in strict work environments where anything compiled was not allowed without a ton of red tape.

Wouldn’t something interpreted like python be a better solution?

For more complicated input/output file handling, certainly.

Little shell scripts do great though if all you need to do is concatenate files by piping them.

It’s like the Internet, it’s not one big truck but a series of tubes.

Yep, in my mind piping together other commands is scripting not programming, exactly what shell scripts are for!

With enough regex and sed/awk you might be able to make even complicated stuff work. I’m not a regex guru (but I do occasionally dabble in the dark arts).

I feel attacked. https://github.com/fmstrat/gam

So what IS the difference between scripting and programming in your view?

No clear line, but to me a script is tying together other programs that you run, those programs themselves are the programs. I guess it’s a matter of how complex the logic is too.

I didn’t know the locale part…

I learned about it the hard way lol. `seq` used to generate a csv file in a script. My Polish friend runs said script, and suddenly there’s an extra column in the csv…

I had os-prober fail if locale was Turkish, the separator thing could explain that.

Powershell is the future

- Windows and office365 admins

I even use powershell as my main scripting language on my Mac now. I’ve come around.

That sounds terrible, honestly

I’m so used to using powershell to handle collections and pipelines that I find I want it for small scripts on Mac. For instance, I was using ffmpeg to alter a collection of files on my Mac recently. I found it super simple to use Powershell to handle the logic. I could have used other tools, but I didn’t find anything about it terrible.

A lot of people call `set -euo pipefail` the “strict mode” for bash programming, as a reference to `"use strict";` in JavaScript.

In other words, always add this if you want to stay sane unless you’re a shellcheck user.

People call `set -euo pipefail` strict mode, but it’s just another footgun in a language full of footguns. Shellcheck is a fucking blessing from heaven though. I wish I could forcibly install it on every developer’s system.

Nushell has pipefail by default (plus an actual error system that integrates with status codes) and has actual number values, so it doesn’t have these problems

nushell is pretty good. I use it for my main shell

although, i still prefer writing utilities in python over nu scripts

Nushell is great as a more powerful scripting language, but a proper language like python is useful too

I’m fine with my shell scripts not running on a PDP11.

My only issue is `-u`. How do you print help text if your required parameters are always filled? There’s no way to test for `-z` if the shell bails on the first line.

Edit: though I guess you could initialise your vars with bad defaults, and test for those.

```bash
#!/bin/bash
set -euo pipefail

if [[ -z "${1:-}" ]]
then
  echo "we need an argument!" >&2
  exit 1
fi
```

God I love bash. There’s always something to learn.

my logical steps

- #! yup

- if sure!

- [[ -z makes sense

- ${1:-} WHAT IN SATANS UNDERPANTS… parameter expansion I think… reads docs … default value! shit that’s nice.

it’s like buying a really simple generic car then getting excited because it actually has a spare and cupholders.

Yeah, there’s also a subtle difference between `${1:-}` and `${1-}`: The first substitutes if `1` is unset or `""`; the second only if `1` is unset. So possibly `${foo-}` is actually the better to use for a lot of stuff, if the empty string is a valid value. There’s a lot to bash parameter expansion, and it’s all punctuation, which ups the line noise-iness of your scripts.

I don’t find it particularly legible or memorable; plus I’m generally not a fan of the variable amount of numbered arguments rather than being able to specify argument numbers and names like we are in practically every other programming language still in common use.
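The difference between the `:-` and `-` forms is easy to check with a throwaway variable. A minimal sketch, with `foo` and the result names chosen only for the demo:

```shell
#!/bin/sh
unset -v foo
unset_colon="${foo:-default}"   # foo is unset  -> "default"
unset_plain="${foo-default}"    # foo is unset  -> "default"

foo=""
empty_colon="${foo:-default}"   # foo is empty  -> "default"
empty_plain="${foo-default}"    # foo is set    -> "" (empty string kept)

printf 'unset: [%s] [%s]\n' "$unset_colon" "$unset_plain"
printf 'empty: [%s] [%s]\n' "$empty_colon" "$empty_plain"
```

Both forms are safe under `set -u`, because neither one expands an unset variable without a fallback.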

That’s good, but if you like to name your arguments first before testing them, then it falls apart

```bash
#!/bin/bash
set -euo pipefail

myarg=$1
if [[ -z "${myarg}" ]]
then
  echo "we need an argument!" >&2
  exit 1
fi
```

This fails. The solution is to do `myarg=${1:-}` and then test

Edit: Oh, I just saw you did that initialisation in the if statement. Take your trophy and leave.

Yeah, another way to do it is

```bash
#!/bin/bash
set -euo pipefail

if [[ $# -lt 1 ]]
then
  echo "Usage: $0 argument1" >&2
  exit 1
fi
```

i.e. just count arguments. Related, `fish` has kind of the orthogonal situation here, where you can name arguments in a better way, but there’s no `set -u`

```fish
function foo --argument-names bar
  ...
end
```

in the end my conclusion is that argument handling in shells is generally bad. Add in historic workarounds like `if [ "x" = "x$1" ]` and it’s clear shells have always been Shortcut City

Side note: One point I have to award to Perl for using `eq`/`lt`/`gt`/etc for string comparisons and `==`/`<`/`>` for numeric comparisons. In shells it’s reversed for some reason? The absolute state of things when I can point to Perl as an example of something that did it better

Perl is the original GOAT! It took a look at shell, realised it could do (slightly) better, and forged its own hacky path!
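To make the “reversed” operators concrete: in shell, the word-like operators (`-lt`, `-eq`) compare integers, while the symbol-like ones (`<`, `=`) compare strings. A quick sketch, with status variable names invented for illustration:

```shell
#!/bin/sh
# -lt compares integers: 10 is not less than 9, so the test fails (status 1).
[ 10 -lt 9 ]; numeric_lt=$?

# < inside [[ ]] compares strings: "10" sorts before "9", so it succeeds (status 0).
bash -c '[[ 10 < 9 ]]'; string_lt=$?

# = compares strings, so "1.0" and "1" differ (status 1).
[ 1.0 = 1 ]; string_eq=$?

# -eq compares integers, so 01 and 1 are equal (status 0).
[ 01 -eq 1 ]; numeric_eq=$?

echo "10 -lt 9: $numeric_lt   10 < 9: $string_lt"
echo "1.0 = 1:  $string_eq   01 -eq 1: $numeric_eq"
```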

I was about to say, half the things people write complex shell scripts for, I’ll just do in something like Perl, Ruby, Python, even node/TS, because they have actual type systems and readability. And library support. Always situation-dependent though.

I have written 5 shell scripts ever, and only 1 of them has been more complex than “I want to alias this single command”

I can’t imagine being an actual shell dev

only 1 of them has been more complex than “I want to alias this single command”

I have some literal shell aliases that took me hours to debug…

I do not envy you.

Nah, it’s in the past.

But people, if you are writing a command that detects a terminal to decide to color its output or not, please add some overriding parameters to it.
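One possible shape for such an override, as a sketch: `want_color` and the `COLOR_MODE` variable are hypothetical names, standing in for whatever `--color=auto|always|never` style switch a tool exposes.

```shell
#!/bin/sh
# want_color MODE: decide whether to emit color codes.
# MODE is a hypothetical setting: auto (default), always, or never.
want_color() {
    case "${1:-auto}" in
        always) return 0 ;;
        never)  return 1 ;;
        *)      [ -t 1 ] ;;   # auto: color only when stdout is a terminal
    esac
}

if want_color "${COLOR_MODE:-auto}"; then
    printf '\033[32m%s\033[0m\n' "ok"
else
    printf '%s\n' "ok"
fi
```

With this, terminal detection stays the default, but a caller inside an alias or a pipe can still force the behavior either way.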

It really isn’t bad especially if you use ash

I was never a fan of `set -e`. I prefer to do my own error handling. But, I never understood why pipefail wasn’t the default. A failure is a failure. I would like to know about it!

IIRC if you pipe something to `head` it will stop reading after some lines and close the pipe, leading to a pipe fail even if everything works correctly
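The `head` case can be reproduced with `yes`, which keeps writing until its pipe is closed. A small sketch, with made-up variable names; the writer’s status is typically 141 (128 + SIGPIPE), but the exact number can differ by environment, so nothing below depends on it.

```shell
#!/bin/sh
# Without pipefail the pipeline succeeds, because head succeeds.
without_pipefail=$(bash -c 'yes 2>/dev/null | head -n 1 >/dev/null; echo $?')

# With pipefail the same pipeline "fails", because yes was cut off mid-write.
with_pipefail=$(bash -c 'set -o pipefail; yes 2>/dev/null | head -n 1 >/dev/null; echo $?')

# PIPESTATUS shows the status of each command, with or without pipefail.
per_command=$(bash -c 'yes 2>/dev/null | head -n 1 >/dev/null; echo "${PIPESTATUS[*]}"')

echo "without pipefail: $without_pipefail"
echo "with pipefail:    $with_pipefail"
echo "per command:      $per_command"
```

Checking `PIPESTATUS` right after a pipeline is one way to see which command failed without turning `pipefail` on for the whole script.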

Yeah, I had a silly hack for that. I don’t remember what it was. It’s been 3-4 years since I wrote bash for a living. While not perfect, I still need to know if a pipeline command failed. Continuing a script after an invisible error, in many cases, could have been catastrophic.

just use python instead.

- wrap around `subprocess.run()`, to call to system utils
- use `pathlib.Path` for file paths and reading/writing to files
- use `shutil.which()` to resolve utilities from your `Path` env var

Here’s an example of some python i use to launch vscode (and terminals, but that requires `dbus`)

```python
from pathlib import Path
from shutil import which
from subprocess import run

def _run(cmds: list[str], cwd=None):
    p = run(cmds, cwd=cwd)
    # raises an error if return code is non-zero
    p.check_returncode()
    return p

VSCODE = which('code')
SUDO = which('sudo')
DOCKER = which('docker')
proj_dir = Path('/path/to/repo')
docker_compose = proj_dir / 'docker/'
windows = [
    proj_dir / 'code',
    proj_dir / 'more_code',
    proj_dir / 'even_more_code/subfolder',
]

for w in windows:
    _run([VSCODE, w])

_run([SUDO, DOCKER, 'compose', 'up', '-d'], cwd=docker_compose)
```

deleted by creator

that is a little more complicated

`p.communicate()` will take a string (or bytes) and send it to the stdin of the process, then wait for `p` to finish execution

there are ways to stream input into a running process (without waiting for the process to finish), but I don’t remember how off the top of my head

```python
from shutil import which
from subprocess import Popen, PIPE, run
from pathlib import Path

LS = which('ls')
REV = which('rev')

ls = run([LS, Path.home()], stdout=PIPE)

p = Popen([REV], stdin=PIPE, stdout=PIPE)
stdout, stderr = p.communicate(ls.stdout)
print(stdout.decode('utf-8'))
```

Or Rust. Use Command::new() for system commands and Path::new() for paths.
